
Ultimate Guide to AI Speech Recognition: Transforming Communication and Technology

Speech recognition technology has come a long way. It started with basic rule-based systems and has evolved into AI-powered solutions that accurately convert spoken words into text. This guide explores how AI speech recognition works, the trends currently shaping it, and where it is heading. Read along to see how these advancements can enhance your daily interactions with technology.

What Is AI Speech Recognition?

AI speech recognition, also known as automatic speech recognition (ASR), is a technology that enables computers and applications to understand and transcribe human speech into text. AI-powered speech recognition systems can analyze and interpret spoken language, allowing users to interact with technology using their voice.

Speech recognition systems are also getting better at handling different accents, dialects, and speaking styles, though this remains an ongoing challenge. Accuracy also drops in noisy places such as offices or cars. Developers aim to improve this with multi-channel processing and speech enhancement techniques.
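To make "accuracy" concrete, a common way to score a transcript is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's output into the reference text, divided by the number of reference words. The sketch below is a minimal illustration, not how any particular product measures quality; the function name and the sample sentences are assumptions made up for this example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Hypothetical example: two of five words were misheard.
    print(word_error_rate("turn on the kitchen lights",
                          "turn on a kitchen light"))
```

A lower value is better. Comparing WER on clean recordings with WER on recordings made in a car or an open office is one simple way to see how much noise hurts a given system.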

Artificial intelligence speech recognition is increasingly being adopted across a range of applications. Some use cases include:

● AI virtual assistants like Alexa and Google Assistant for voice commands and queries.

● Transcription services that use AI for speech-to-text conversion.

● Voice-controlled smart home devices for managing appliances and systems hands-free.

● Customer service automation for automated responses and improved call routing.

How Does AI Enhance Speech Recognition?

The integration of AI has vastly improved the accuracy and capabilities of speech recognition systems. Below are advancements that help AI enhance speech recognition.

Natural Language Processing (NLP)

Natural Language Processing (NLP) breaks down language into syntax, semantics, and discourse to analyze meaning and context in speech. Syntax deals with sentence structure, semantics with word meaning, and discourse with how sentences form coherent conversations or texts.

NLP powers virtual assistants, automated transcription, and language translation by understanding users, converting queries to text, and translating languages contextually. It also allows systems to grasp the intent and sentiment behind the language spoken for natural interactions.
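As a rough illustration of what "grasping intent" means once speech has been converted to text, here is a deliberately simple keyword-based sketch. Real assistants use trained language models rather than keyword lists; the intent names and trigger words below are hypothetical and chosen only for this example.

```python
import re

# Hypothetical intents and trigger words, for illustration only.
INTENT_KEYWORDS = {
    "set_alarm": {"alarm", "wake"},
    "play_music": {"play", "music", "song"},
    "get_weather": {"weather", "rain", "forecast"},
}


def detect_intent(transcript: str) -> str:
    """Return the intent whose keywords overlap most with the transcript."""
    words = set(re.findall(r"[a-z']+", transcript.lower()))
    best_intent, best_score = "unknown", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = len(words & keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


if __name__ == "__main__":
    for phrase in ("Will it rain tomorrow?", "Play my favorite song", "Hello there"):
        print(phrase, "->", detect_intent(phrase))
```

The keyword approach breaks as soon as users phrase things unexpectedly, which is exactly the gap that the semantic and discourse analysis described above is meant to close.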

Deep Neural Networks (DNNs)

Deep Neural Networks (DNNs) are highly effective for AI voice recognition, specifically for processing and analyzing audio signals to recognize speech patterns. With DNNs, an AI voice recognition system can cope with variations in accent and dialect as well as background noise.

DNNs also play a role in refining language understanding, enabling systems to handle diverse speech patterns and contexts. Integrating DNNs with Natural Language Processing (NLP) improves speech recognition by capturing the semantic meaning and intent of spoken words. Advancements in DNN architectures, such as convolutional and recurrent neural networks, have significantly improved speech enhancement capabilities.
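Before a neural network can analyze an audio signal, the signal is typically cut into short overlapping frames, and each frame is summarized as numeric features. The sketch below shows only that preparation step, under simplifying assumptions: the helper names are made up for this example, and log energy stands in for the richer spectral features that production systems usually compute.

```python
import math


def frame_signal(samples, frame_len, hop):
    """Split samples into overlapping frames of frame_len, advancing by hop."""
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame_len and hop must be positive")
    last_start = len(samples) - frame_len
    return [samples[start:start + frame_len]
            for start in range(0, last_start + 1, hop)]


def log_energy(frame, floor=1e-10):
    """Log of the summed squared amplitude; floor avoids log(0) on silence."""
    energy = sum(s * s for s in frame)
    return math.log(max(energy, floor))


if __name__ == "__main__":
    # Hypothetical signal: a short 440 Hz tone sampled at 16 kHz.
    tone = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(1600)]
    frames = frame_signal(tone, frame_len=400, hop=160)  # 25 ms frames, 10 ms hop
    features = [log_energy(f) for f in frames]
    print(len(frames), "frames; first feature:", round(features[0], 3))
```

The sequence of per-frame features is what a convolutional or recurrent network would then consume to predict sounds or characters.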

Continuous Learning

Continuous learning is essential for speech recognition systems because it enables them to adapt and improve accuracy over time. Speech-to-text AI models can learn from new examples and refine their language understanding as more speech data is fed into the system. This capability matters for keeping up with the evolving nature of human language, including new words, accents, and linguistic trends.
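One small-scale way to picture this adaptation is a word-frequency model that keeps updating as new transcripts arrive, so a newly popular word gradually stops looking improbable. This is a toy sketch under stated assumptions: the class name is hypothetical, and real systems update far larger acoustic and language models rather than simple word counts.

```python
from collections import Counter


class AdaptiveVocabulary:
    """Toy unigram model that keeps learning from new transcripts."""

    def __init__(self):
        self.counts = Counter()
        self.total = 0

    def update(self, transcript: str) -> None:
        """Fold the words of one new transcript into the running counts."""
        words = transcript.lower().split()
        self.counts.update(words)
        self.total += len(words)

    def probability(self, word: str) -> float:
        """Add-one smoothed probability, so unseen words are never zero."""
        vocab_size = len(self.counts) + 1  # one extra slot for unseen words
        return (self.counts[word.lower()] + 1) / (self.total + vocab_size)


if __name__ == "__main__":
    vocab = AdaptiveVocabulary()
    vocab.update("call mom")
    before = vocab.probability("doomscrolling")
    vocab.update("stop doomscrolling")
    after = vocab.probability("doomscrolling")
    print(before, "->", after)
```

After the second update the new word's probability rises, which is the behavior a recognizer needs in order to stop replacing unfamiliar words with similar-sounding familiar ones.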

What Are the Major Trends in AI Speech Recognition for 2025?

Since the rapid rise of AI in 2021, AI technology has continued to evolve. Here are several key trends that are shaping the future of speech recognition in 2025:

Integration with AI Voice Assistants

Speech-to-text AI voice assistants are increasingly integrating large language models (LLMs) to understand a broader range of spoken commands and respond more naturally. Take MagicLM, for instance. This is HONOR's exclusive large language model, powered by Llama 2, offering advanced on-device AI capabilities. It excels in features such as Q&A, text creation, and reading comprehension. You can access this AI feature on the HONOR Magic V2 with the latest MagicOS 9 update.

Shift Away from Text-Based Interfaces

The classic chat box AI interface is evolving towards more natural voice interactions, thanks to advances in speech-to-text technology. Users can now talk to AI assistants instead of typing, which makes using them easier and more accessible. However, voice interfaces can sometimes misunderstand accents, background noise, or unclear commands, leading to errors. Handling these errors effectively keeps users happy.
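A common pattern for handling such errors is to act only when the recognizer is confident and to ask for confirmation or a repeat otherwise. The sketch below assumes the recognizer returns a transcript together with a confidence score between 0 and 1; the function name, the threshold of 0.75, and the reply wording are all hypothetical.

```python
def respond(transcript: str, confidence: float, threshold: float = 0.75) -> str:
    """Choose between acting, confirming, and asking the user to repeat."""
    if not transcript.strip():
        # Nothing usable was recognized, for example because of heavy noise.
        return "Sorry, I didn't catch that. Could you say it again?"
    if confidence < threshold:
        # Low confidence: confirm before doing anything the user may not want.
        return f'Did you say "{transcript}"?'
    return f"OK: {transcript}"


if __name__ == "__main__":
    print(respond("set a timer for ten minutes", 0.93))
    print(respond("call Dan", 0.52))
    print(respond("", 0.10))
```

Where the threshold sits is a design choice: set it too high and the assistant nags with confirmations, set it too low and it acts on misheard commands.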

Expanded Multilingual and Dialect Support

AI speech-to-text models are advancing to handle a broader range of languages and dialects, breaking down barriers to global communication. Techniques such as dialect identification and adaptive models are being developed to enhance the recognition and processing of dialectal variations.

AI-powered voice assistants now integrate advanced multilingual speech recognition, enabling them to understand a wide variety of natural language commands and requests. Improved multilingual speech recognition also enhances accessibility by providing accurate captions and transcripts for audio and video content in multiple languages. The democratization of advanced multilingual speech recognition through open-source AI models is expanding access to smaller platforms and developers.

How Are AI Phones Transforming with Speech Recognition?

AI-powered speech recognition is changing the way we use our smartphones. Features like voice-activated virtual assistants, real-time transcription, and hands-free control are becoming increasingly common.

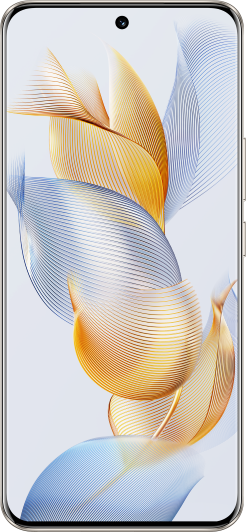

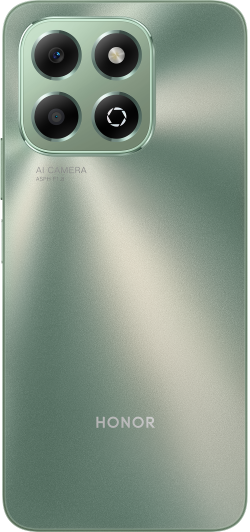

Modern AI-powered devices such as HONOR's AI smartphones, specifically the HONOR Magic6 Pro, its newest model equipped with an AI assistant, can enhance user interaction through sophisticated on-device AI capabilities.

For example, imagine you are traveling to Tokyo with your HONOR Magic6 Pro. Upon arrival, you ask your AI voice assistant in English for directions to your hotel, and your phone translates the request and provides clear directions in Japanese. During your trip, you dictate notes into your phone, instantly turning your spoken words into text and keeping you organized without typing. As you explore Tokyo, you ask your phone about local attractions, and it gives you personalized recommendations based on what you like and where you've been before, making your travel experience easier and more fun.

What Is the Future of AI Speech Recognition Beyond 2025?

Looking beyond 2025, AI speech recognition is set to advance significantly. It will enhance accessibility through natural, voice-controlled interfaces for people with disabilities and improve real-time transcription and captioning for those who are deaf or hard of hearing.

Deeper integration into assistive technologies like screen readers and smart home controllers will also empower users to control their environments more independently. Multilingual capabilities will further expand for better global communication and cross-cultural interactions, while AI's ability to learn user preferences will personalize interactions.

Various industries, including healthcare, finance, and customer service, are adopting AI-driven speech recognition to streamline operations and enhance customer experiences. AI-driven biometric authentication using AI voice recognition will also enhance security in online transactions, protecting against identity theft.
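Voice-based authentication is often described in terms of comparing a numeric "voiceprint" captured at enrollment with one extracted from the current attempt. The sketch below illustrates only that comparison step with cosine similarity. It assumes some upstream model has already turned each recording into a fixed-length vector; the vectors, function names, and the 0.8 threshold are hypothetical, and a real deployment would need additional safeguards such as protection against replayed recordings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no voice information
    return dot / (norm_a * norm_b)


def is_same_speaker(enrolled, attempt, threshold=0.8):
    """Accept the attempt only if it is close enough to the enrolled voiceprint."""
    return cosine_similarity(enrolled, attempt) >= threshold


if __name__ == "__main__":
    enrolled = [0.9, 0.1, 0.4]      # hypothetical stored voiceprint
    same_user = [0.85, 0.15, 0.38]  # hypothetical new attempt by the same person
    stranger = [0.1, 0.9, -0.3]     # hypothetical attempt by someone else
    print(is_same_speaker(enrolled, same_user), is_same_speaker(enrolled, stranger))
```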

Conclusion

The integration of AI into speech recognition technology has transformed the way we communicate with technology, making it more natural, accessible, and efficient. We can expect even more remarkable advancements in speech recognition, and these will shape the future of human-computer interaction. However, as AI continues to advance, it's important to consider privacy and data security as AI speech recognition is widely adopted, so that these innovations benefit users responsibly.

FAQs

How Does AI Generate Speech?

AI-powered text-to-speech (TTS) technology uses advanced algorithms like natural language processing and deep learning to transform written text into lifelike speech. It works by analyzing the text to grasp its meaning and context, then producing audio that sounds remarkably human, with natural speech patterns and intonation.

What Are Examples of Voice Recognition AI?

Popular examples of voice recognition AI include ChatGPT (OpenAI), Google Assistant (Google), and Cortana (Microsoft). These assistants understand and respond to voice commands, allowing users to interact with devices and services without using their hands.

Source: HONOR Club
